Neural image rendering

Novel view synthesis

We want to construct novel views from one or more source images. That is, given these images, can we move the camera?

You have a couple of options:

- Do you want 3D geometry? You can start by

- View synthesis from a single image using ground-truth depth or semantics

- View synthesis from regular pictures. No depth, no semantics.

- Purely imaginary view synthesis: work using generative models

1. Representing 3D structure

One way of representing 3D structure is depth. If no ground-truth depth is available for your images, you need to estimate it. Getting good depth estimates from a single image is an entire subfield by itself ("single image depth estimation")!
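Once you have a depth map (ground truth or estimated), turning it into explicit 3D structure is a single back-projection step. The sketch below assumes a pinhole camera with a known 3x3 intrinsics matrix `K` and depth measured along the optical (z) axis; the function name `backproject_depth` is just illustrative.

```python
import numpy as np

def backproject_depth(depth, K):
    """Lift a depth map (H, W) to a point cloud (H*W, 3) in camera coordinates.

    Assumes a pinhole camera with 3x3 intrinsics K and z-depth (not ray length).
    """
    H, W = depth.shape
    # Pixel grid: u runs along columns (x), v along rows (y).
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    # Ray through each pixel is K^-1 [u, v, 1]^T; scaling by depth gives the 3D point.
    rays = pix @ np.linalg.inv(K).T
    return rays * depth.reshape(-1, 1)
```

Row `v * W + u` of the result is the 3D point seen at pixel `(u, v)`.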

In recent work like SynSin, depth is learned end to end through a differentiable renderer, which builds 3D priors into the pipeline.
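To see why a renderer can be "differentiable", here is a simplified soft z-buffer: every output pixel is a softmax-weighted average over all projected points, with weights favouring points that land nearby and sit closer to the camera. This is not SynSin's exact formulation; it is a minimal sketch under the assumption that points are already projected to pixel coordinates `uv` with depths `z`, and `sigma` (splat radius) and `tau` (depth softness) are made-up hyperparameters. Because every operation is smooth, gradients could flow back to depths and features if this were written in an autodiff framework.

```python
import numpy as np

def soft_splat(uv, z, feats, H, W, sigma=0.5, tau=0.1):
    """Render per-point features into an (H, W, C) image with soft weights.

    uv: (N, 2) projected pixel coordinates (x, y); z: (N,) depths;
    feats: (N, C) per-point features such as colours.
    """
    ys, xs = np.mgrid[0:H, 0:W]
    pix = np.stack([xs, ys], axis=-1).reshape(-1, 1, 2).astype(float)  # (HW, 1, 2)
    d2 = np.sum((pix - uv[None, :, :]) ** 2, axis=-1)  # (HW, N) squared distances
    # Log-weights: nearby points and points closer to the camera dominate.
    logits = -d2 / (2 * sigma**2) - z[None, :] / tau
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(logits)
    w /= w.sum(axis=1, keepdims=True)  # normalise over points per pixel
    return (w @ feats).reshape(H, W, -1)
```

With a small `tau` the nearest point wins almost completely, approximating a hard z-buffer; a larger `tau` blends occluded points in. Note this sketch has no background term, so pixels far from every point still receive a weighted average.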

An interesting approach would be to see if we can do the same thing without this renderer. If so, we'll need to represent 3D structure in another way, so that it can be fed to the second part (generating images).

2. Generating images

This bit is more flexible :)

References

The main idea of SynSin is a differentiable renderer, which allows image-to-point-cloud feature correspondences to be learned efficiently. Using a point cloud (built via a depth network) also gives you an explicit 3D representation of the scene.
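The "move the camera" step on an explicit point cloud is just a rigid transform followed by perspective projection. A minimal sketch, assuming points in source-camera coordinates, a 4x4 transform `T_tgt_src` (source camera to target camera) and pinhole intrinsics `K`; all names are illustrative, not SynSin's code.

```python
import numpy as np

def project_to_view(points, T_tgt_src, K):
    """Move source-camera points into a target camera and project them.

    points: (N, 3); T_tgt_src: (4, 4) rigid transform; K: (3, 3) intrinsics.
    Returns pixel coordinates (N, 2) and target-view depths (N,).
    """
    homo = np.hstack([points, np.ones((len(points), 1))])  # (N, 4) homogeneous
    cam = (homo @ T_tgt_src.T)[:, :3]  # points in the target camera frame
    z = cam[:, 2]
    proj = cam @ K.T  # homogeneous pixel coordinates
    uv = proj[:, :2] / z[:, None]  # perspective divide (assumes z > 0)
    return uv, z
```

The returned `uv` and `z` are exactly what a splatting renderer needs as input; points with `z <= 0` are behind the target camera and should be dropped first.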

Pretty cool!

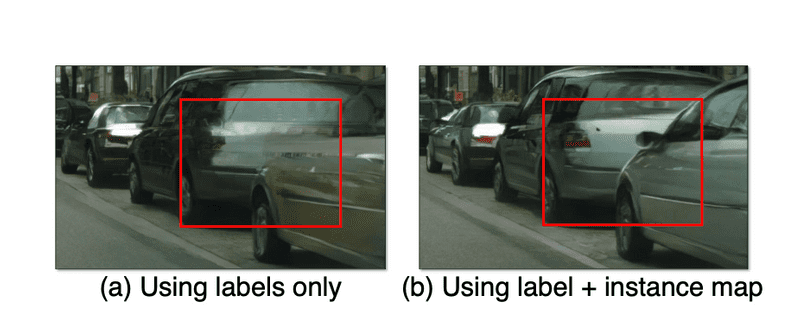

High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs (2018)

Can generate images of KITTI and Cityscapes!

Monocular Neural Image based Rendering with Continuous View Control

"In this paper, we propose a novel learning pipeline that determines the output pixels directly from the source color but forces the network to implicitly reason about the underlying geometry."

To do this, they do two things:

- Make an encoder that can model viewpoint changes. They do this with a transforming autoencoder: by explicitly rotating the latent vector during training (i.e. multiplying it by a rotation matrix), the latent space is taught to be interpretable in that way. So at test time, we can encode an image, apply the desired transformation to its latent vector, and get a depth map of the transformed perspective.

- Use the target depth map to render the image! The hard part is pretty much done; now we just have to use the result to render an image. Basically, pick which source colors go to which target pixels, based on this super useful depth.
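"Rotating the latent vector" becomes concrete if the latent code is reshaped into `n` 3D points, so the same rotation matrix that moves the camera can be applied to it directly. This is a sketch under that assumption; the encoder and decoder are omitted, and `rotation_y` / `transform_latent` are hypothetical helper names. The property that makes the latent "interpretable" is that rotations compose: rotating by `a` and then `b` equals rotating by `a + b`.

```python
import numpy as np

def rotation_y(theta):
    """3x3 rotation about the vertical (y) axis, e.g. a change in azimuth."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def transform_latent(z, R):
    """Rotate a latent code shaped (n, 3), treating each row as a 3D point.

    In a transforming autoencoder the decoder would then be trained to produce
    the target-view output (e.g. depth) from this rotated code.
    """
    return z @ R.T
```

In training, the rotation would be the known relative pose between the source and target views; at test time any continuous angle can be plugged in.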
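"Picking which colors go to which pixels" from a target depth map is classic backward warping: back-project each target pixel with its depth, move it into the source camera, project it, and read the source color there. A minimal sketch assuming shared intrinsics `K` and a 4x4 transform `T_src_tgt` (target camera to source camera); it uses nearest-neighbour lookup for brevity, whereas a trainable pipeline would use bilinear sampling so gradients reach the depth.

```python
import numpy as np

def warp_from_source(src_img, tgt_depth, K, T_src_tgt):
    """Fill each target pixel with a colour sampled from the source image.

    src_img: (H, W, C); tgt_depth: (H, W); pixels mapping outside the source are 0.
    """
    H, W = tgt_depth.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    pts = (pix @ np.linalg.inv(K).T) * tgt_depth.reshape(-1, 1)  # target camera
    pts = np.hstack([pts, np.ones((len(pts), 1))]) @ T_src_tgt.T  # source camera
    proj = pts[:, :3] @ K.T
    z = proj[:, 2]
    valid = z > 1e-6  # only points in front of the source camera
    us = np.full(len(z), -1)
    vs = np.full(len(z), -1)
    us[valid] = np.round(proj[valid, 0] / z[valid]).astype(int)
    vs[valid] = np.round(proj[valid, 1] / z[valid]).astype(int)
    valid &= (us >= 0) & (us < W) & (vs >= 0) & (vs < H)
    out = np.zeros((H * W, src_img.shape[2]), dtype=src_img.dtype)
    out[valid] = src_img[vs[valid], us[valid]]
    return out.reshape(H, W, -1)
```

Because the lookup goes from target to source, every target pixel gets at most one color and there are no holes from splatting; the cost is that disoccluded regions simply copy whatever the source shows there.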

A lot of these works cite [Maxim Tatarchenko, Alexey Dosovitskiy, and Thomas Brox], who did something similar in 2016.

KITTI

In total there are 18560 images for training and 4641 images for testing. We construct training pairs by randomly selecting the target view among the 10 nearest frames of the source view. The relative transformation is obtained from the global camera poses.
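The pair-construction recipe above can be sketched as follows. Two assumptions: "nearest" means nearest by frame index within one sequence, and the global poses are 4x4 camera-to-world matrices. The relative transform then maps source-camera coordinates through the world frame into the target camera. `sample_pair` is a hypothetical name.

```python
import numpy as np

def sample_pair(i, poses, rng, k=10):
    """Pick a random target among the k frames nearest (by index) to source i.

    poses: (F, 4, 4) camera-to-world matrices for one sequence.
    Returns (target index, 4x4 transform from source camera to target camera).
    """
    others = [j for j in range(len(poses)) if j != i]
    nearest = sorted(others, key=lambda j: abs(j - i))[:k]
    j = int(rng.choice(nearest))
    # source camera -> world -> target camera
    T_tgt_src = np.linalg.inv(poses[j]) @ poses[i]
    return j, T_tgt_src
```

Near the start or end of a sequence the k candidates are all taken from one side, which keeps the candidate count fixed.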

Birds

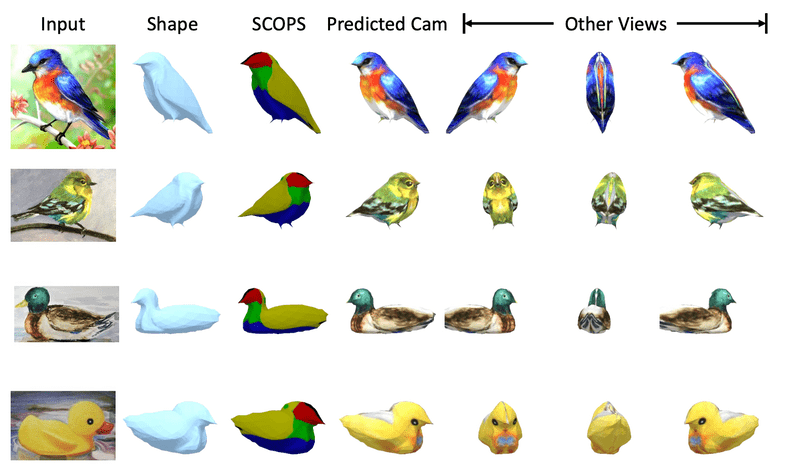

Self-supervised Single-view 3D Reconstruction via Semantic Consistency

Predicting depth first

Another way to do 3D reconstruction is to predict the depth from input images. This is a pretty common approach.